Photo by the blowup on Unsplash

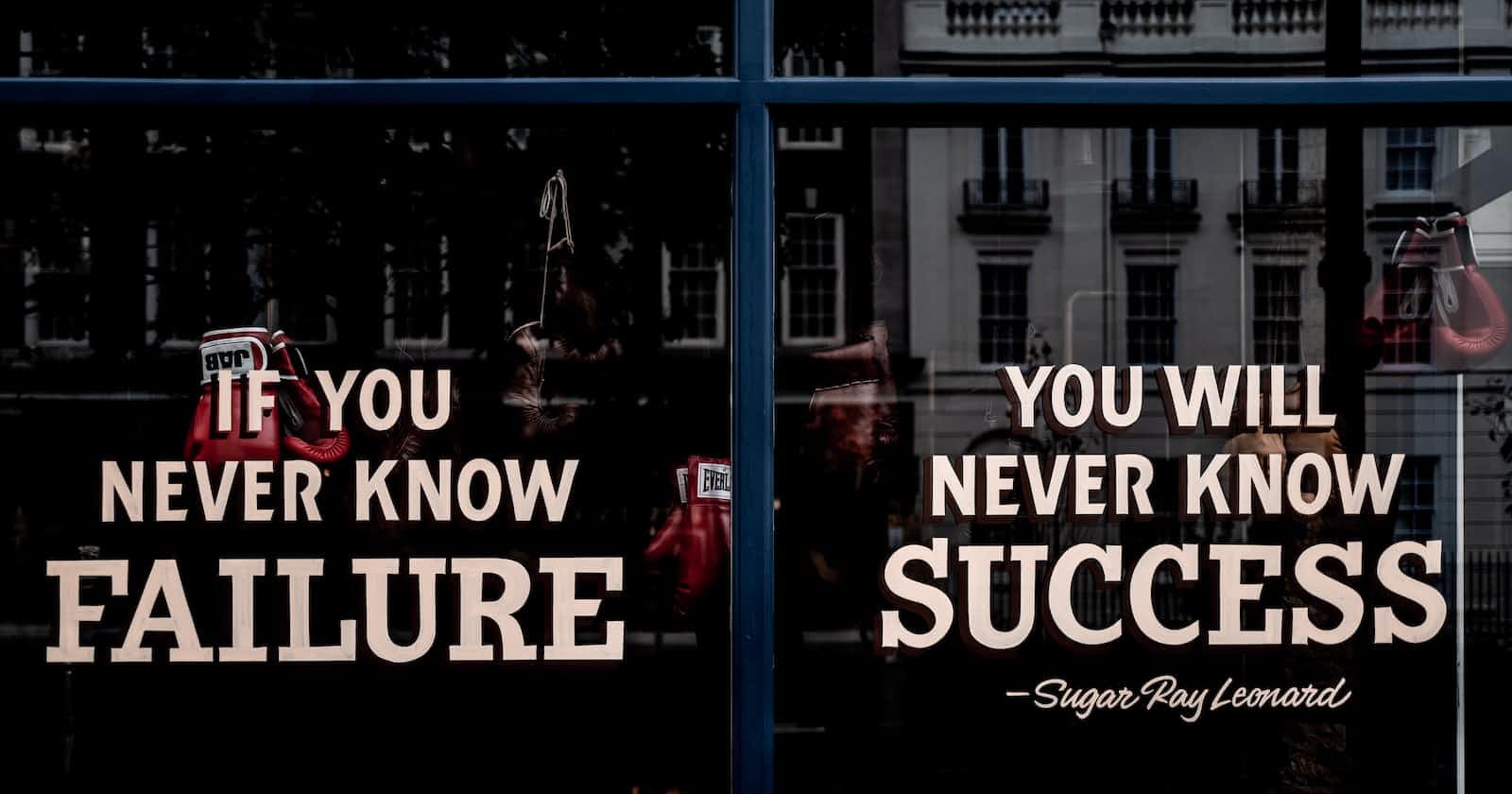

"Failure is always an option" - why failure can be a teacher:

Learning from failure using the Scientific Method and Mythbusters Logic

I've been having a pretty rough month of late: I got Covid halfway through moving house, I lost my job, had a bad interview for another job and, maybe worst of all, I broke a mirror 😆.

It's enough to get anyone down, and it did hit me quite badly, especially as it all happened within the space of a couple of weeks. It doesn't help that I'm quite hard on myself, too. But I remembered a quote from the great show "Mythbusters", which went:

"Failure is always an option!"

This, in turn, reminded me of an episode of the "HTML All The Things" podcast ([edit] I couldn't remember exactly which at first, but another listener of the show on Twitter, @mrkarstrom, helped me out and correctly found it: episode number 20. Listen to them, they're great) where one of the two hosts said (and I'm paraphrasing): "We are taught in school that failure is bad. If you fail the test, that's bad. But that's not always true!"

In this blog post, I want to convince you that failing doesn't mean you are a failure (which is pretty much what had been repeating in my head for the last couple of weeks). Failure can highlight lessons you need to learn. It can help you become a better person/developer/father/husband etc. And some types of failure are actually good things and should be lauded rather than admonished.

Where did we get this notion that failure is bad?

So where does this idea come from that failure is a bad thing? As mentioned above, it was pointed out to me by one of the hosts of the "HTML All The Things" podcast that in school we are taught that failing is a bad thing. From a very early age, we are given tests and graded based on our performance. If we pass, we've done well. If we don't pass, we've failed. This unintended lesson is so deeply ingrained in us as children that it affects our whole lives. There is a sad trend (which may be trying to counteract this lesson) that is even starting to appear in the UK, where end-of-year assemblies (or prize-giving day at my school) see the whole school given awards for the smallest things. Whilst I'm all for encouraging young children, this tradition is dangerous ground to build on if it is not tempered with the bitter lesson of failure.

My son just got the results of his A Level (end of high school, taken at 18 years old) exams. I was very proud and overjoyed that he got excellent results, which allowed him to go on to university (only the second Ross, after my niece, to do so).

I didn't go to university. My father, though very supportive, advised that he didn't feel I would benefit much from it. At the time, I wanted to join the police and didn't need a degree to do that, so I happily agreed. As it turned out, I didn't get into the police (too young and in need of experience) and, eventually, decided to go into IT, which - you guessed it! - needs a degree. In hindsight, I completely agree with my father's assessment. I would not have done well at university at the time.

The dangers of subscribing to the "Failure = Bad" model

"Results day", as it's come to be known in the UK, is such a nerve-racking time for students and parents, as these results quite literally decide the rest of the students' lives (or at the least, the next 3-4 years). Had my son not gotten good enough grades, he would be seen as having "failed" his A Levels and "ruined" his future. He wouldn't have been able to get into many universities and would have found getting a job in IT (what he'll be studying) very difficult.

About 5 years ago, we went through the same thing when he attempted the 11 plus (an exam needed to attend certain exclusive secondary schools). He didn't pass it and was very upset with himself, saying he'd failed and wasn't good enough. I was just chuffed that he'd tried it.

It's not just my own views and observations that bear this out: scientific research has also found that drawing such a hard line between failure and success is dangerous. This is ironic, as the scientific method is based on failure (more on that later). Writer Jeff Wuorio said that "Failure underscores the need to take chances... if you are taking risks, almost by definition, you are going to fail at some point." It's like the old saying/cliche goes: nothing ventured, nothing gained. Moreover, he said that failure can force you to rethink every assumption:

“When something goes wrong, not only do you consider the various means of fixing that particular problem, you notch up your thinking to identify those broader elements that may have led to the snafu and others like it. And down the line, that can mean solutions and adjustments before any further problems even crop up.”

There's another inherent danger in subscribing to this model, which is called "The Blame Game". Harvard Business School professor Amy Edmondson states that "Every child learns at some point that admitting failure means taking the blame", and that this has made society, and businesses in particular, less willing to move to a culture of psychological safety where mistakes can be learned from. In her research, Edmondson found that in businesses as diverse as hospitals and banks, the majority of failures went unreported:

"When I ask executives [...] how many of the failures in their organizations are truly blameworthy, their answers are usually in the single digits. But when I ask how many are treated as blameworthy, they say (after a pause or a laugh) 70% to 90%."

Why we should think differently about failure:

Photo by Roger Bradshaw on Unsplash

When Edmondson talks about "blameworthy failures", she describes a scale of failures with varying degrees of blame attached to them. She describes nine classes of failure, divided into three categories like so:

- Preventable failures (most blameworthy):
  - Deviance from the process
  - Inattention to process
  - Lack of ability
- Complexity-related failures:
  - Faulty Process
  - Task too difficult
  - Process Complexity
- Intelligent Failures (least blameworthy):
  - Uncertain outcomes
  - Hypothesis Testing
  - Exploratory Testing

What this suggested scale shows is that there are, of course, failures that are 100% down to bad choices. If a bus driver runs a red light because they are looking at their phone, they are to blame for not following the correct rules (in this case, not using your phone behind the wheel of a vehicle). This would be an example of Deviance from the Process.

If that same bus driver simply didn't notice the red light, it would still be their fault and would be classified as Inattention to Process. If they didn't even know how to drive a bus, that would fall under the third and last point in the Preventable Failures group: Lack of Ability.

The second group covers failures where the person isn't necessarily at fault, because they were following a bad process, didn't have the skills for the task given to them, or were working with a process that is in itself too complex. The Three Mile Island nuclear accident in America in 1979 was eventually assigned to this last category because the system was so complex that no one would have been able to follow the process or prevent an error.

The final group is where we come back to my earlier comment about how science is grounded in the ability to fail well. If we think about the experiments at CERN, the researchers are trying to produce results they believe will happen, but they don't know for certain what they will find. This is a core concept of the scientific method, which has been embedded in science for hundreds of years. The method, where a researcher says what they want to do, why, and what they expect to happen, was baked into my GCSE Science lessons and was what I based my Psychology coursework on for A levels. In science, there's no baggage attached to failing. A famous quote attributed to Thomas Edison, from when he was trying to build the incandescent light bulb, is:

"I didn't fail! I found 1000 elements that wouldn't work"
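As a front-end learner I find it easier to remember a scale like this once I've written it down as data. Here is a minimal sketch in Python of the nine classes and three groups listed above. The names `Category`, `FAILURE_CLASSES`, `categorise` and `is_intelligent_failure` are my own inventions for illustration and don't come from Edmondson's work:

```python
from enum import Enum


class Category(Enum):
    """The three groups on the scale, from most to least blameworthy."""
    PREVENTABLE = "Preventable failures (most blameworthy)"
    COMPLEXITY_RELATED = "Complexity-related failures"
    INTELLIGENT = "Intelligent failures (least blameworthy)"


# The nine classes from the list above, each mapped to its group.
FAILURE_CLASSES = {
    "Deviance from the process": Category.PREVENTABLE,
    "Inattention to process": Category.PREVENTABLE,
    "Lack of ability": Category.PREVENTABLE,
    "Faulty Process": Category.COMPLEXITY_RELATED,
    "Task too difficult": Category.COMPLEXITY_RELATED,
    "Process Complexity": Category.COMPLEXITY_RELATED,
    "Uncertain outcomes": Category.INTELLIGENT,
    "Hypothesis Testing": Category.INTELLIGENT,
    "Exploratory Testing": Category.INTELLIGENT,
}


def categorise(failure_class: str) -> Category:
    """Look up which group a class of failure belongs to (hypothetical helper)."""
    return FAILURE_CLASSES[failure_class]


def is_intelligent_failure(failure_class: str) -> bool:
    """True only for classes in the least blameworthy group."""
    return categorise(failure_class) is Category.INTELLIGENT


# The distracted bus driver versus a scientist testing a hypothesis.
print(categorise("Deviance from the process").value)
print(is_intelligent_failure("Hypothesis Testing"))
```

The point of the lookup is simply that the first question after something goes wrong becomes "which kind of failure was this?" rather than "whose fault was it?".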

How we should learn from mistakes:

Whilst Edmondson wasn't trying to shift blame or make a person blameless for their actions, she did make a very clear point: Failures need to be learnt from properly.

As you may know, I went through a DevOps Bootcamp that finished earlier this year (see my other articles on that process). One of the key concepts of DevOps (and the agile methodology it subscribes to) is that software and/or a business is iterated and improved on. Today, we're used to reporting a bug to the developer or company behind an app and having it fixed in a later release, and this feedback loop is a key point in the development life cycle. In the early years, updates to programs could be months or years down the line, potentially leading users to abandon an app because it wasn't fixed in time. Nowadays, the process is usually a lot quicker.

But this is one of the biggest advantages of failing. If Edison had not failed so many times, we would have a very different set of light bulbs today. If scientists didn't keep pushing the envelope and trying to prove (or disprove) their theories, our understanding of the world would be very different.

One of the oft-quoted examples of good learning from failure in most DevOps books is that of the Toyota Andon cord. This is a cord that hangs above all workstations at their plant, and when a worker comes across a problem, no matter how small, they pull the cord. This immediately gets one of the supervisors to look at the problem. If it's something that can't be fixed within 60 seconds, the entire production line is brought to a halt, regardless of cost, and all the workers 'swarm' the problem. In this case, too many cooks don't spoil the broth (or rather more heads are better than one), and once the problem is solved, production continues and the new lessons are embedded into the manufacturing process.

The ability to call a halt to the process and report a problem free of blame is a strategy any business should follow, or risk losing valuable lessons that could prevent a disaster.
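To make the Andon cord flow above a bit more concrete, here is a rough sketch of it as a small Python function. It only models the steps as I've described them in this post. The `Problem` class, its `estimated_fix_seconds` field and the `pull_andon_cord` function are hypothetical names of my own, and this is not how Toyota actually implements anything:

```python
from dataclasses import dataclass

# The 60-second window mentioned above.
FIX_WINDOW_SECONDS = 60


@dataclass
class Problem:
    description: str
    estimated_fix_seconds: int  # hypothetical field, for illustration only


def pull_andon_cord(problem: Problem) -> list[str]:
    """Return the steps that follow a cord pull, in order."""
    # Every pull, however small the problem, gets a supervisor's attention.
    steps = [f"supervisor inspects: {problem.description}"]

    if problem.estimated_fix_seconds <= FIX_WINDOW_SECONDS:
        # Fixable inside the window, so the line keeps running.
        steps.append("fixed on the spot, line keeps running")
    else:
        # Not fixable in time: stop everything and swarm the problem.
        steps.append("production line halted")
        steps.append("workers swarm the problem")
        steps.append("production resumes")
        steps.append("lesson embedded into the process")

    return steps


for step in pull_andon_cord(Problem("misaligned door panel", 300)):
    print(step)
```

Notice that nothing in the function records who pulled the cord. That is the blame-free part: the flow cares about the problem and the lesson, not the person.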

In a video about her paper, Edmondson talked about the Columbia Shuttle tragedy. The report that came out after the accident found that numerous "little" reports were ignored or not thought to be important. Unfortunately, it was the combination of all these "little nothings" that led to the disaster and, eventually, the end of the shuttle program. Had those "little nothings" been seen to earlier, the tragedy could have been prevented.

How does this all relate to me as a developer?

So, how does all this knowledge about failure and how to learn from it relate to me as a developer? First, although I lost my job, it has allowed me to focus more on my studying, getting me one (albeit small) step closer to my goal. Second, even if I did fail a job interview, it was good practice for any future interviews (in fact, I have one next week, and although the job still isn't what I'm searching for, it will give me good practice for "the one").

But it goes further than that too. Although I'm still early on my path as a front-end developer, I know enough now to start thinking about making my first website: my portfolio website. If I want to look professional and serious about my career in web development, I need to have a website. It's going to be a little rough around the edges at first (it's bound to be, as I haven't practised my skills much), but it will show what I'm capable of. I intend to use this as an intelligent failure lesson; one that will (I hope) be classed as a Hypothesis Testing failure. I will try lots of things, which may not always work, but it will be an important step in showing what I can do and in practising the DevOps deployment skills I already have. My site will be iterated on and perfected over time so that eventually I will have a home base which I'm proud to call my own. One that I hope will also be linked to this blog.

Conclusion:

Just like the Mythbusters team, I am aware that failure is always a possibility and, in fact, one not to shy away from. Although failure can feel personal or debilitating at first, it's important to remember that above all else, it is a learning opportunity if viewed through the lens of the scientific method, and one where blame needn't always be laid at your own (or others') feet.

Use failures as teaching tools, and try to frame them in one of the three groups from Edmondson's article to help extract the most learning potential from them.

Happy coding!